Updates

- The blogs for Class 5 and Class 6 are now posted.

- Every project team should have received feedback on its Project Idea. The next main deliverable for the project is your Project Mini-Proposal, which is due Monday, 16 February. (This will be discussed in class on Tuesday.)

Reading for Tuesday, 10 February (repeated from Readings for Week 4)

- Cynthia Rudin. Stop explaining black box machine learning models for high stakes decisions and use interpretable models instead. Nature Machine Intelligence, May 2019. [PDF Link] [arXiv version (less nicely formatted, but with fixed equations)]

Reading for Thursday, 12 February:

- Milad Nasr, Javier Rando, Nicholas Carlini, Jonathan Hayase, Matthew Jagielski, A. Feder Cooper, Daphne Ippolito, Christopher A. Choquette-Choo, Florian Tramèr, and Katherine Lee. Scalable extraction of training data from aligned, production language models. In International Conference on Learning Representations (ICLR) 2025.

ICLR Web Link. (You can also see the review discussion: ICLR Forum)

One of the authors of this paper, Matthew Jagielski (now at Anthropic), will visit UVA on Friday, 13 February and give a Distinguished Talk at 11:00am in Rice 540.

Slides

Class 6 Slides (PDF)

Schedule

Due Tomorrow (Wednesday 4 February, 11:59pm): Project Idea (Assignment in Canvas)

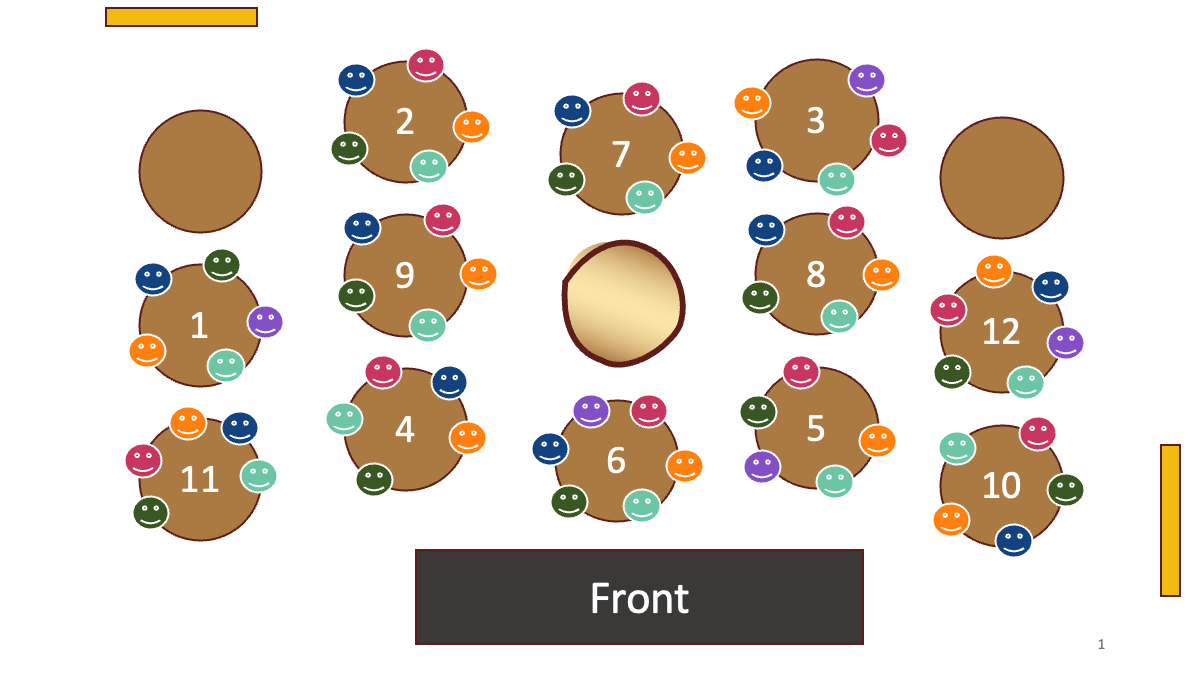

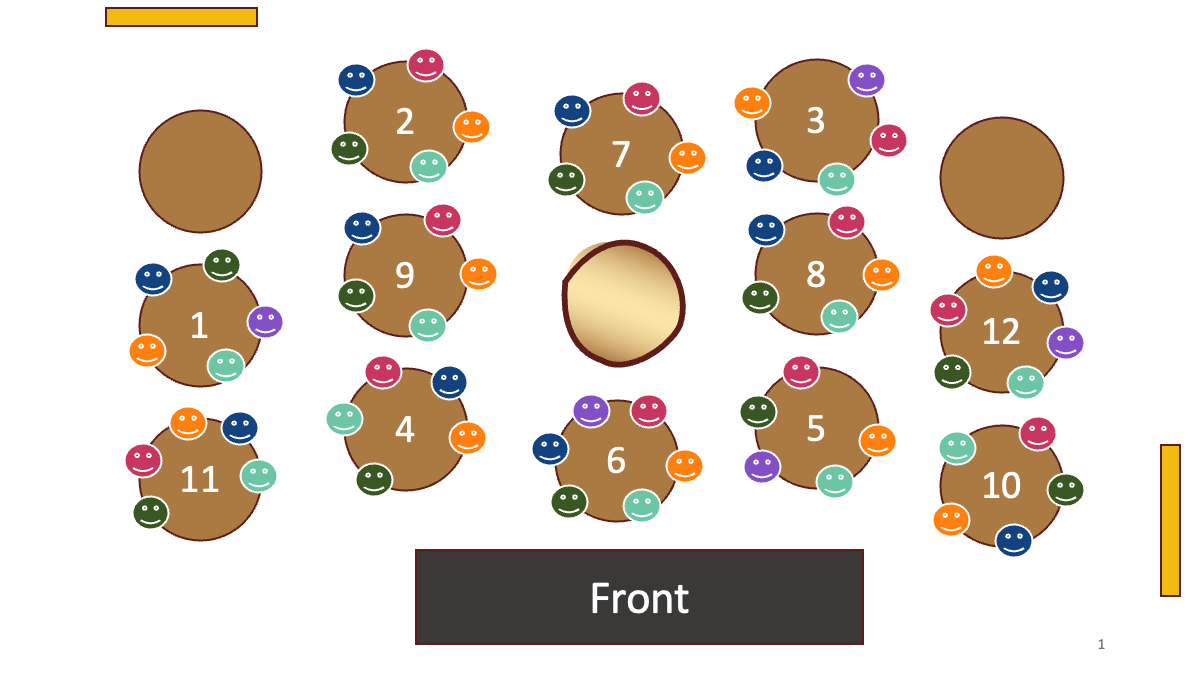

Here’s the Table Teams map for this week (Feb 3 and Feb 5 classes):

Clinical use of AI in Medicine

Reading for Tuesday, 3 February (repeated from Previous Post, but with date updated due to snow day):

- Ethan Goh, Robert J. Gallo, Eric Strong, Yingjie Weng, Hannah Kerman, Jason A. Freed, Joséphine A. Cool, Zahir Kanjee, Kathleen P. Lane, Andrew S. Parsons, Neera Ahuja, Eric Horvitz, Daniel Yang, Arnold Milstein, Andrew P. J. Olson, Jason Hom, Jonathan H. Chen, and Adam Rodman. GPT-4 assistance for improvement of physician performance on patient care tasks: a randomized controlled trial. Nature Medicine, February 2025. [PDF Link] [Web Link]

AI Bias and Interpretability

Our next main topic, for the Thursday, 5 February and Tuesday, 10 February classes, is biases in AI systems and how to measure and mitigate them. There is already a vast literature on this topic, and entire courses and research agendas are focused on it, so we will only see a small slice of it in these two classes (and may have more classes later that go into more depth or touch on other aspects).

Readings for Thursday, 5 February:

Some additional readings (optional, not expected for everyone, but potentially ones the Lead Team will include):

Readings for Tuesday, 10 February:

- Cynthia Rudin. Stop explaining black box machine learning models for high stakes decisions and use interpretable models instead. Nature Machine Intelligence, May 2019. [PDF Link] [arXiv version (less nicely formatted, but with fixed equations)]

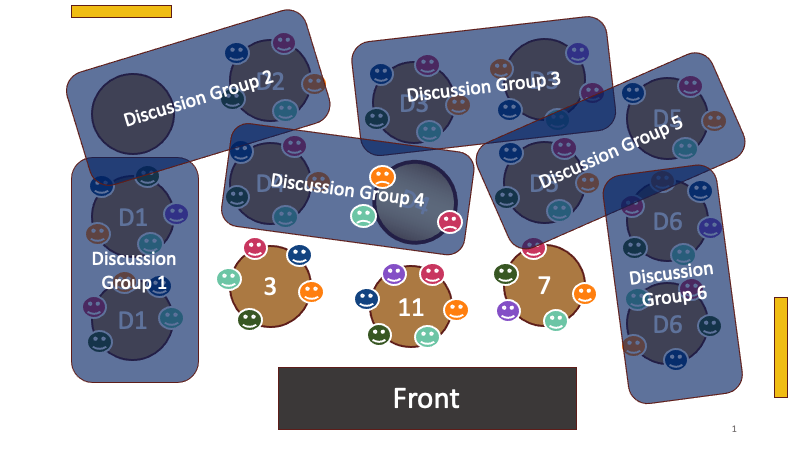

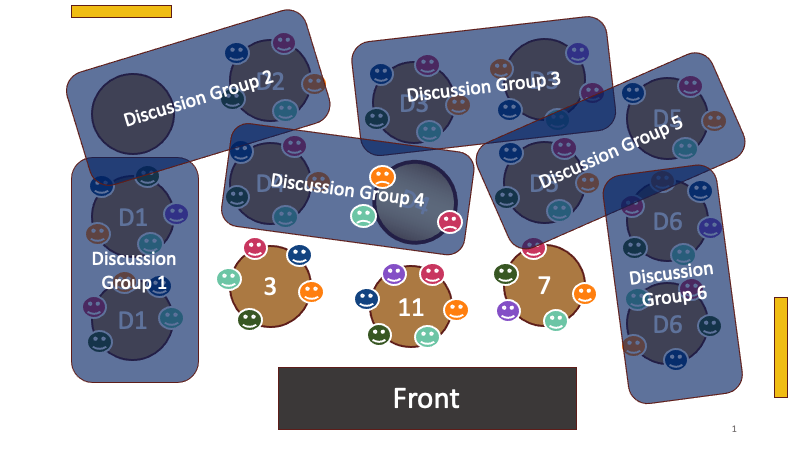

Here’s the Table Teams map for Jan 29:

Instead of sitting in your “Table Teams”, if your team is not presenting or blogging today, you will sit with your discussion group so you have a chance to meet the people in your on-line discussion in person.